My Top 3 AI Image Generators (and why designers should use them)

Every month, a new AI image generation model drops, and the internet declares, “design is over.” It isn’t. At SSW, our designers continue to ship plenty of brand assets (for SSW, TinaCMS, YakShaver and more), faster than usual, because they treat AI like a junior assistant: one that takes instructions via prompts, gives variations, changes backgrounds, and fixes typography. It is not a replacement for good taste, brand judgment, or accessibility.

Not all AI tools are created equal, and some have come a really long way since their inception. There is a lot to choose from, but I’m going to look at the 3 that I think are awesome, what they’re good at, and the traps to avoid.

Do you know when you should use AI?

At SSW, we mostly use AI to help with our enterprise app code. Tools like Cursor and Copilot certainly accelerate coding. On the design side, AI can edit images when you need surgical changes and need them fast, e.g., remove a stray chair, extend a canvas, swap a background, generate consistent variations, harmonise style, or tidy text in signs.

As AI editing becomes standard, provenance (knowing where an image came from) is essential. SynthID, Google DeepMind’s watermarking technology, embeds an imperceptible, pixel-level watermark at generation/edit time (in addition to any visible “AI” label). It’s designed to survive common transforms (compression, mild crops/brightness changes) and can be verified by compatible detectors.

You can read more about this in our SSW Rule: Do you use AI to edit images?

My Top 3 Image Editors/Generators

#1 – Google’s Nano Banana (Gemini 2.5 Flash Image)

Nano Banana is a fun tool. It’s great for conversational edits, quick photo restyling, character consistency, and watermark-by-default workflows.

Why I like it:

- Conversational & fast – Great for multi‑step edits (“Change the jacket, keep the lighting”).

- Consistency – Solid at maintaining the likeness of a person across shots (handy for speaker cards and staff profiles).

- Great for mock-ups – You can generate a few on‑brand variations, then take the best one into Photoshop for proper finishing.

Cons:

- Watermarking is always on – All outputs include SynthID by default, with no way to opt out. Great for provenance (knowing the origin), but worth knowing before you start.

- Resolution & payload ceilings – Current docs highlight 1024-px generation and API limits, e.g. a 20 MB inline request size unless you use the Files API.
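
As a rough illustration of that payload ceiling, here is a minimal Python sketch for deciding whether an edit request is small enough to send inline. It only does the arithmetic: `upload_route` and `INLINE_LIMIT_BYTES` are hypothetical names, not part of any Google SDK, and treating 20 MB as 20 × 1024 × 1024 bytes is an assumption, so check the current docs for the exact figure.

```python
from __future__ import annotations

# Assumption: the 20 MB inline ceiling, counted as 20 * 1024 * 1024 bytes.
INLINE_LIMIT_BYTES = 20 * 1024 * 1024


def upload_route(prompt: str, image_sizes: list[int],
                 limit: int = INLINE_LIMIT_BYTES) -> str:
    """Return "inline" if the prompt plus images fit under the limit,
    otherwise "files_api" (hypothetical labels for the two routes)."""
    # Estimate the request size from the raw prompt bytes and image bytes.
    total = len(prompt.encode("utf-8")) + sum(image_sizes)
    return "inline" if total <= limit else "files_api"


MB = 1024 * 1024
single_headshot = upload_route("Change the jacket, keep the lighting", [3 * MB])
two_large_photos = upload_route("Blend these two shots", [12 * MB, 12 * MB])
```

Here a single 3 MB headshot stays `inline`, while two 12 MB photos come out as `files_api`. This estimate uses raw file sizes; any encoding overhead in the real request would make it larger, so leave some headroom.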

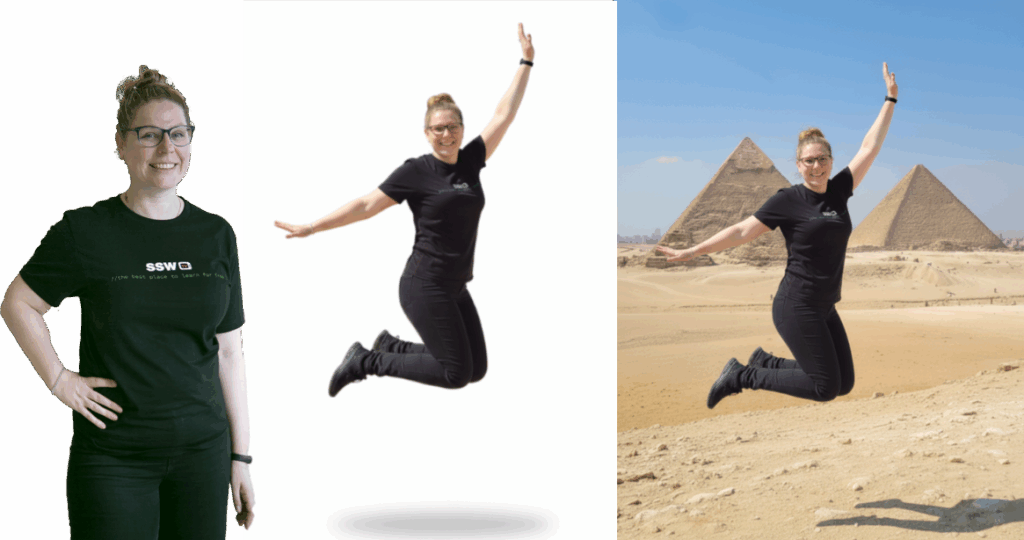

I recently asked the team at SSW to generate images of themselves using an existing photo. Some were quite amusing, but they were also scarily accurate.

#2 – Midjourney v6

Gen Alpha shouldn’t be afraid of its name: there is nothing mid about Midjourney! 🤣 It’s best used for hero images and stylised key art that need minimal edits.

Why I like it:

- Outstanding images – The prettiest renders for concept art, moodboards, thumbnails, and hero images.

- Accuracy – Big jump in prompt fidelity and in‑image text compared to early versions.

- Easy to use – Handy editing tools (Zoom, Pan, Vary Region) for targeted fixes.

- Quick look‑development – You can spin up 12 ideas in 10 minutes and pick a direction before committing to final assets.

Cons:

- Discord-centric – While there’s a web editor now, Discord has more options.

- Less privacy – Your generations are public by default unless you use Stealth Mode correctly (and “private” images may still be visible in shared spaces unless you generate in DMs/private servers).

- Inpainting is good, not Photoshop-grade – The Vary Region (inpaint) tool is handy for tweaks, but precision edits can be hit-and-miss; many designers still finish surgical fixes in Photoshop.

#3 – OpenAI Images (DALL·E 3 / GPT‑4o Images)

Best known for prompt fidelity, legible typography, and chat‑first iteration in ChatGPT, OpenAI Images makes AI-generated images really accessible.

Why I like it:

- Accuracy – Excellent at getting the brief right (multi‑step, chatty refinement).

- Generates text – Strong in‑image text (poster titles, signage) and designs from existing images.

- Fast compositing – E.g. “Combine this product PNG + this background + this lighting brief.”

Cons:

- Speed – Ultra‑specific realism can be slower than purpose‑built art models; be patient.

- Precision – Editing is not Photoshop-grade; plan to retouch the images afterwards.

- Brand consistency – Character likeness, props, and lighting can drift across batches.

Final thoughts

The winners aren’t the teams with the fanciest model; they’re the ones who standardise a workflow that designers love. Pick one tool for photo edits (Nano Banana), one for look‑dev (Midjourney), and keep OpenAI Images in ChatGPT handy for typography‑heavy images. Then document your AI processes in your design system and ship more, consistently.

What do you think of using AI in your design workflows? Have you had any good or bad experiences with AI images? I’d love to hear them. Drop me a comment below. 👇